DOI: https://doi.org/10.14483/22487638.22360

Published: 28-12-2024

Issue: Vol. 28 No. 82 (2024): October - December

Section: Review

Monitoreo de cultivos y suelos en agricultura de precisión con UAV e inteligencia artificial: una revisión

Crop and Soil Monitoring in Precision Agriculture with UAVs and Artificial Intelligence: A Review

Keywords:

Precision agriculture, UAV, remote sensing, artificial intelligence, machine learning, fertilization, biomass, yield prediction, technological adoption.

Abstract

Background: The growing global demand for food, along with the environmental and social challenges associated with agricultural intensification, has driven the development of technological solutions aimed at improving the efficiency and sustainability of food production. In this context, precision agriculture, supported by unmanned aerial vehicles (UAVs) and artificial intelligence (AI), emerges as a key tool for the detailed monitoring of crops and soils.

Objective: This article presents a structured review of the scientific literature on UAV-based remote sensing techniques, with an emphasis on applications for estimating fertilization levels and aboveground biomass, predicting yield, and detecting pests and weeds in agricultural systems.

Methodology: A systematic search was conducted in academic databases (Scopus and Web of Science), using combinations of key terms related to precision agriculture, UAVs, remote sensing, AI, and agronomic monitoring. Rigorous inclusion criteria were applied, resulting in the selection of 62 articles for analysis. The information was synthesized through a comparative analysis of techniques, sensors, algorithms, and performance metrics.

Results: The review highlights a growing trend in the use of UAVs equipped with RGB, multispectral, hyperspectral, and LiDAR sensors, combined with machine learning and deep learning techniques, to estimate key crop parameters such as leaf area index (LAI), nitrogen content, and yield. Promising approaches based on multimodal data fusion and hybrid models (CNN + GRU, ensemble methods) were identified; these can overcome limitations of classical methods such as spectral saturation. However, open-access datasets are scarce and data acquisition protocols are poorly standardized, which hinders the replicability and generalization of models.

Conclusions: The integrated use of UAVs and AI represents a transformative tool for smart agricultural management. Nevertheless, effective implementation requires overcoming technical, economic, and structural barriers, as well as promoting open data access and the development of context-aware solutions. This review underscores the importance of advancing toward more explainable, lightweight, and adaptable systems, and fostering an inclusive and responsible digital transformation of agriculture.
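The spectral saturation mentioned in the Results can be illustrated with a small numerical sketch. The NDVI formula below is the standard normalized difference of near-infrared and red reflectance. The reflectance curves in `toy_reflectance` are hypothetical exponentials chosen only for illustration; they do not come from the reviewed studies and are not a calibrated canopy model.

```python
import math
from typing import List, Sequence, Tuple


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def toy_reflectance(lai: float) -> Tuple[float, float]:
    """Return hypothetical (NIR, red) canopy reflectance for a given LAI.

    Assumption: simple exponential curves picked for illustration only.
    """
    red = 0.03 + 0.17 * math.exp(-0.8 * lai)  # red falls quickly as leaves absorb it
    nir = 0.50 - 0.25 * math.exp(-0.4 * lai)  # NIR rises more slowly with leaf layers
    return nir, red


def ndvi_curve(lai_values: Sequence[float]) -> List[float]:
    """NDVI for each LAI value under the toy reflectance model."""
    return [ndvi(*toy_reflectance(lai)) for lai in lai_values]


if __name__ == "__main__":
    lais = [0, 1, 2, 3, 4, 5, 6]
    values = ndvi_curve(lais)
    previous = None
    for lai, value in zip(lais, values):
        # The gain per unit of LAI shrinks as the canopy gets denser.
        gain = "" if previous is None else f"  (gain {value - previous:+.3f})"
        print(f"LAI={lai}: NDVI={value:.3f}{gain}")
        previous = value
```

Under these assumed curves, each additional unit of LAI adds less to the NDVI than the previous one, so a dense canopy becomes hard to distinguish from a slightly denser one using the index alone. This is the kind of limitation that motivates combining spectral data with structural or textural information, as in the fusion approaches the review describes.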

Referencias

D. W. James, y K. L. Wells, Soil sample collection and handling: technique based on source and degree of field variability, Hoboken, NJ: John Wiley & Sons, 1990, pp. 25-44. ↑2

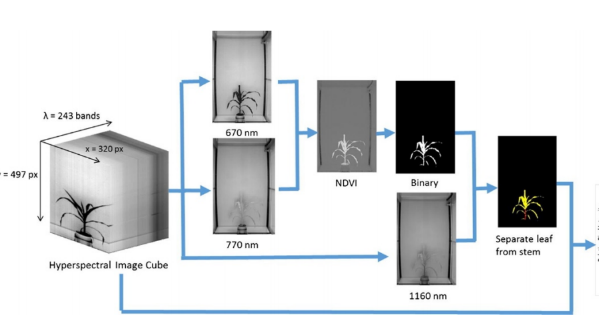

M. B. Stuart, A. J. S. McGonigle, y J. R. Willmott, “Hyperspectral imaging in environmental monitoring: a review of recent developments and technological advances in compact field deployable systems”, Sensors, vol. 19, n.º 1414, p. 3071, en. 2019. ↑3

S. Khanal, K. C. Kushal, J. P. Fulton, et al., “Remote sensing in agriculture—Accomplishments, limitations, and opportunities”, Remote Sensing, vol. 12, n.º 22, p. 3783, nov. 2020. ↑3

Y. Ge, G. Bai, V. Stoerger, et al., “Temporal dynamics of maize plant growth, water use, and leaf water content using automated high throughput RGB and hyperspectral imaging”, Computers and Electronics in Agriculture, vol. 127, pp. 625–632, sep. 2016. Disponible en: https://www.sciencedirect.com/science/article/pii/S0168169916305464 ↑3

E. Salamí, C. Barrado, y E. Pastor, “UAV flight experiments applied to the remote sensing of vegetated areas”, Remote Sensing, vol. 6, n.º 11, pp. 11051–11081, nov. 2014. Disponible en: https://www.mdpi.com/2072-4292/6/11/11051 ↑3, 4

H. Yao, R. Qin, y X. Chen, “Unmanned aerial vehicle for remote sensing applications—A review”, Remote Sensing, vol. 11, n.º 1212, p. 1443, en. 2019. ↑3

G. Pajares, “Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs)”, Photogrammetric Engineering Remote Sensing, vol. 81, n.º 4, pp. 281–329, abr. 2015. ↑4

I. Colomina, y P. Molina, “Unmanned aerial systems for photogrammetry and remote sensing: a review”, ISPRS Journal of Photogrammetry and Remote Sensing, vol. 92, pp. 79–97, jun. 2014. ↑4

P. Daponte, L. De Vito, L. Glielmo, et al., “A review on the use of drones for precision agriculture”, IOP Conference Series: Earth and Environmental Science, vol. 275, n.º 1, p. 012022, my. 2019. Disponible en: https://dx.doi.org/10.1088/1755-1315/275/1/012022 ↑4

J. Xue, y B. Su, “Significant remote sensing vegetation indices: a review of developments and applications”, Journal of Sensors, vol. 2017, n.º 1, p. 1353691, en. 2017. Disponible en: https://onlinelibrary.wiley.com/doi/abs/10.1155/2017/1353691 ↑4

X. Zhang, F. Zhang, Y. Qi, et al., “New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV)”, International Journal of Applied Earth Observation and Geoinformation, vol. 78, pp. 215–226, jun. 2019. Disponible en: https://linkinghub.elsevier.com/retrieve/pii/S0303243418306305 ↑4

R. Vidican, A. Mălinaș, O. Ranta, et al., “Using remote sensing vegetation indices for the discrimination and monitoring of agricultural crops: a critical review”, Agronomy, vol. 13, n.º 12, p. 3040, dic. 2023. Disponible en: https://www.mdpi.com/2073-4395/13/12/3040 ↑4

D. Radočaj, A. Šiljeg, R. Marinović, et al., “State of major vegetation indices in precision agriculture studies indexed in Web of Science: a review”, Agriculture, vol. 13, n.º 3, p. 707, mzo. 2023. Disponible en: https://www.mdpi.com/2077-0472/13/3/707 ↑4

J. G. A. Barbedo, “A review on the use of unmanned aerial vehicles and imaging sensors for monitoring and assessing plant stresses”, Drones, vol. 3, n.º 2, p. 40, jun. 2019. Disponible en: https://www.mdpi.com/2504-446X/3/2/404 ↑4

S. Gokool, M. Mahomed, R. Kunz, et al., “Crop monitoring in smallholder farms using unmanned aerial vehicles to facilitate precision agriculture practices: a scoping review and bibliometric analysis”, Sustainability, vol. 15, n.º 4, p. 3557, en. 2023. Disponible en: https://www.mdpi.com/2071-1050/15/4/3557 ↑4

M. Schirrmann, A. Giebel, F. Gleiniger, et al., “Monitoring agronomic parameters of winter wheat crops with low-cost UAV imagery”, Remote Sensing, vol. 8, n.º 99, p. 706, sep. 2016. https://doi.org/10.3390/rs8090706 ↑8

J. Wu, D. Zheng, Z. Wu, et al., “Prediction of buckwheat maturity in UAV-RGB images based on recursive feature elimination cross-validation: a case study in Jinzhong, northern China”, Plants, vol. 11, n.º 2323, p. 3257, en. 2022. Disponible en: https://doi.org/10.3390/plants11233257 ↑9

S. B. Khose, y D. R. Mailapalli, “UAV-based multispectral image analytics and machine learning for predicting crop nitrogen in rice”, Geocarto International, vol. 39, n.º 1, en. 2024. Disponible en: https://doi.org/10.1080/10106049.2024.2373867 ↑9, 10

R. N. Sahoo, R. G. Rejith, S. Gakhar, et al., “Drone remote sensing of wheat N using hyperspectral sensor and machine learning”, Precision Agriculture, vol. 25, n.º 2, pp. 704–728, abr. 2024. Disponible en: https://doi.org/10.1007/s11119-023-10089-7 ↑9

N. Lu, Y. Wu, H. Zheng, et al., “An assessment of multi-view spectral information from UAV-based color-infrared images for improved estimation of nitrogen nutrition status in winter wheat”, Precision Agriculture, vol. 23, n.º 5, pp. 1653–1674, oct. 2022. ↑9, 10, 11

X. Peng, D. Chen, Z. Zhou, et al., “Prediction of the nitrogen, phosphorus and potassium contents in grape leaves at different growth stages based on UAV multispectral remote sensing”, Remote Sensing, vol. 14, n.º 11, jun. 2022. ↑9, 10, 11

H. Zha, Y. Miao, T. Wang, et al., “Improving unmanned aerial vehicle remote sensing-based rice nitrogen nutrition index prediction with machine learning”, Remote Sensing, vol. 12, n.º 2, en. 2020. ↑9, 11, 12, 13, 14

U. Lussem, A. Bolten, I. Kleppert, et al., “Herbage mass, N concentration, and N uptake of temperate grasslands can adequately be estimated from UAV-based image data using machine learning”, Remote Sensing, vol. 14, n.º 13, jul. 2022. ↑9, 11, 12, 13

Z. Cheng, X. Gu, Y. Du, et al., “Multi-modal fusion and multi-task deep learning for monitoring the growth of film-mulched winter wheat”, Precision Agriculture, vol. 25, n.º 4, pp. 1933–1957, ag. 2024. ↑9, 11, 12, 13

S.-H. Zhang, L. He, J.-Z. Duan, et al., “Aboveground wheat biomass estimation from a low-altitude UAV platform based on multimodal remote sensing data fusion with the introduction of terrain factors”, Precision Agriculture, vol. 25, n.º 1, pp. 119–145, feb. 2024. ↑9, 11, 12, 13, 14

S. Zhu, W. Zhang, T. Yang, et al., “Combining 2D image and point cloud deep learning to predict wheat above ground biomass”, Precision Agriculture, vol. 25, n.º 6, pp. 3139–3166, dic. 2024. ↑9, 11, 12, 13, 14

Y. Guan, K. Grote, J. Schott, et al., “Prediction of soil water content and electrical conductivity using random forest methods with UAV multispectral and ground-coupled geophysical data”, Remote Sensing, vol. 14, n.º 4, feb. 2022. ↑9, 10

R. N. Sahoo, S. Gakhar, R. Rejith, et al., “Unmanned aerial vehicle (UAV)–based imaging spectroscopy for predicting wheat leaf nitrogen”, Photogrammetric Engineering & Remote Sensing, vol. 89, n.º 2, pp. 107–116, feb. 2023. ↑10, 11

H. Hammouch, S. Patil, S. Choudhary, et al., “Hybrid-AI and model ensembling to exploit UAV-based RGB imagery: an evaluation of sorghum crop’s nitrogen content”, Agriculture-Basel, vol. 14, n.º 10, oct. 2024. ↑10, 11

S. Xu, X. Xu, Q. Zhu, et al., “Monitoring leaf nitrogen content in rice based on information fusion of multi-sensor imagery from UAV”, Precision Agriculture, vol. 24, n.º 6, pp. 2327–2349, dic. 2023. ↑10

A. Jenal, H. Hueging, H. E. Ahrends, et al., “Investigating the potential of a newly developed UAV-mounted VNIR/SWIR imaging system for monitoring crop traits-a case study for winter wheat”, Remote Sensing, vol. 13, n.º 9, my. 2021. ↑11, 12, 13, 14

Z. Fu, J. Jiang, Y. Gao, et al., “Wheat growth monitoring and yield estimation based on multi-rotor unmanned aerial vehicle”, Remote Sensing, vol. 12, n.º 3, p. 508, en. 2020. Disponible en: https://www.mdpi.com/2072-4292/12/3/508 ↑11, 12

A. Ashapure, J. Jung, A. Chang, et al., “Developing a machine learning based cotton yield estimation framework using multi-temporal UAS data”, ISPRS Journal of Photogrammetry and Remote Sensing, vol. 169, pp. 180–194, nov. 2020. ↑12

K. C. Kushal, M. Romanko, A. Perrault, et al., “On-farm cereal rye biomass estimation using machine learning on images from an unmanned aerial system”, Precision Agriculture, vol. 25, n.º 5, pp. 2198–2225, oct. 2024. ↑12, 13, 14

M. Bian, Z. Chen, Y. Fan, et al., “Integrating spectral, textural, and morphological data for potato LAI estimation from UAV images”, Agronomy-Basel, vol. 13, n.º 12, dic. 2023. ↑12

Q. Cheng, F. Ding, H. Xu, et al., “Quantifying corn LAI using machine learning and UAV multispectral imaging”, Precision Agriculture, vol. 25, n.º 4, pp. 1777–1799, ag. 2024. ↑12

X. Lu, W. Li, J. Xiao, et al., “Inversion of leaf area index in citrus trees based on multi-modal data fusion from UAV platform”, Remote Sensing, vol. 15, n.º 14, jul. 2023. ↑12

J. Jiang, K. Johansen, C. S. Stanschewski, et al., “Phenotyping a diversity panel of quinoa using UAV-retrieved leaf area index, SPAD-based chlorophyll and a random forest approach”, Precision Agriculture, vol. 23, n.º 3, pp. 961–983, jun. 2022. ↑12

R. A. Oliveira, R. Naesi, P. Korhonen, et al., “High-precision estimation of grass quality and quantity using UAS-based VNIR and SWIR hyperspectral cameras and machine learning”, Precision Agriculture, vol. 25, n.º 1, pp. 186–220, febr. 2024. ↑12

R. Mukhamediev, Y. Amirgaliyev, Y. Kuchin, et al., “Operational mapping of salinization areas in agricultural fields using machine learning models based on low-altitude multispectral images”, Drones, vol. 7, n.º 6, jun. 2023. ↑12

Y. Gan, Q. Wang, T. Matsuzawa, et al., “Multivariate regressions coupling colorimetric and textural features derived from UAV-based RGB images can trace spatiotemporal variations of LAI well in a deciduous forest”, International Journal of Remote Sensing, vol. 44, n.º 15, pp. 4559–4577, ag. 2023. ↑12

T. W. Bell, N. J. Nidzieko, D. A. Siegel, et al., “The utility of satellites and autonomous remote sensing platforms for monitoring offshore aquaculture farms: a case study for canopy forming kelps”, Frontiers in Marine Science, vol. 7, dic. 2020. Disponible en: https://www.frontiersin.org/journals/marine-science/articles/10.3389/fmars.2020.520223/full ↑13

R. Ballesteros, D. S. Intrigliolo, J. F. Ortega, et al., “Vineyard yield estimation by combining remote sensing, computer vision and artificial neural network techniques”, Precision Agriculture, vol. 21, n.º 6, pp. 1242–1262, dic. 2020. ↑14, 15

S. Fei, M. A. Hassan, Y. Xiao, et al., “UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat”, Precision Agriculture, vol. 24, n.º 1, pp. 187–212, febr. 2023. ↑14, 15

Y. Yokoyama, A. de Wit, T. Matsui, et al., “Accuracy and robustness of a plant-level cabbage yield prediction system generated by assimilating UAV-based remote sensing data into a crop simulation model”, Precision Agriculture, vol. 25, n.º 6, pp. 2685–2702, dic. 2024. ↑14, 15

L. Costa, J. McBreen, Y. Ampatzidis, et al., “Using UAV-based hyperspectral imaging and functional regression to assist in predicting grain yield and related traits in wheat under heat-related stress environments for the purpose of stable yielding genotypes”, Precision Agriculture, vol. 23, n.º 2, pp. 622–642, abr. 2022. ↑14, 15

A. Feng, J. Zhou, E. Vories, et al., “Prediction of cotton yield based on soil texture, weather conditions and UAV imagery using deep learning”, Precision Agriculture, vol. 25, n.º 1, pp. 303–326, febr. 2024. ↑15, 16

E. C. Tetila, B. B. Machado, G. Astolfi, et al., “Detection and classification of soybean pests using deep learning with UAV images”, Computers and Electronics in Agriculture, vol. 179, art. 105836, dic. 2020. Disponible en: https://linkinghub.elsevier.com/retrieve/pii/S016816991831055X ↑16

F. H. Iost Filho, W. B. Heldens, Z. Kong, et al., “Drones: innovative technology for use in precision pest management”, Journal of Economic Entomology, vol. 113, n.º 1, pp. 1–25, febr. 2020. ↑16

M. Darbyshire, S. Coutts, P. Bosilj, et al., “Review of weed recognition: a global agriculture perspective”, Computers and Electronics in Agriculture, vol. 227, n.º 1, dic. 2024. ↑16

J. Kaivosoja, J. Hautsalo, J. Heikkinen, et al., “Reference measurements in developing UAV systems for detecting pests, weeds, and diseases”, Remote Sensing, vol. 13, n.º 7, abr. 2021. ↑16

R. Rosle, N. N. Che’Ya, Y. Ang, et al., “Weed detection in rice fields using remote sensing technique: a review”, Applied Sciences-Basel, vol. 11, n.º 22, nov. 2021. Disponible en: https://doi.org/10.3390/app112210701 ↑16

M. H. M. Roslim, A. S. Juraimi, et al., “Using remote sensing and an unmanned aerial system for weed management in agricultural crops: a review”, Agronomy-Basel, vol. 11, n.º 9, sept. 2021. ↑16

W. Guo, Z. Gong, C. Gao, et al., “An accurate monitoring method of peanut southern blight using unmanned aerial vehicle remote sensing”, Precision Agriculture, vol. 25, n.º 4, pp. 1857–1876, ag. 2024. ↑16, 18

W. Bao, W. Liu, X. Yang, et al., “Adaptively spatial feature fusion network: an improved UAV detection method for wheat scab”, Precision Agriculture, vol. 24, n.º 3, pp. 1154–1180, jun. 2023. ↑16, 18

B. Das, y C. S. Raghuvanshi, “Advanced UAV-based leaf disease detection: deep radial basis function networks with multidimensional mixed attention”, Multimedia Tools and Applications, dic. 2024. Disponible en: https://doi.org/10.1007/s11042-024-20462-x ↑17

A. K. Sangaiah, F.-N. Yu, Y.-B. Lin, et al., “UAV T-YOLO-rice: an enhanced tiny YOLO networks for rice leaves diseases detection in paddy agronomy”, IEEE Transactions on Network Science and Engineering, vol. 11, n.º 6, pp. 5201–5216, nov. 2024. Disponible en: https://ieeexplore.ieee.org/document/10387738 ↑17, 18

M. Gavrilovic, D. Jovanovic, P. Bozovic, et al., “Vineyard zoning and vine detection using machine learning in unmanned aerial vehicle imagery”, Remote Sensing, vol. 16, n.º 3, febr. 2024. ↑17, 18

A. Barreto, F. R. I. Yamati, M. Varrelmann, et al., “Disease incidence and severity of cercospora leaf spot in sugar beet assessed by multispectral unmanned aerial images and machine learning”, Plant Disease, vol. 107, n.º 1, pp. 188-200, en. 2023. ↑18

D. Percival, K. Anku, y J. Langdon, “Phenotype, phenology, and disease pressure assessments in wild blueberry fields through the use of remote sensing technologies”, Acta Horticulturae, n.º 1381, pp. 123–130, nov. 2023. Disponible en: https://www.actahort.org/books/1381/1381_17.htm ↑17, 18

G. B. C. Narayanappa, S. H. Abbas, L. Annamalai, et al., “Revolutionizing UAV: experimental evaluation of IoT-enabled unmanned aerial vehicle-based agricultural field monitoring using remote sensing strategy”, Remote Sensing in Earth Systems Sciences, vol. 7, n.º 4, pp. 411–425, dic. 2024. Disponible en: https://doi.org/10.1007/s41976-024-00134-y ↑17

S. Liu, D. Yin, H. Feng, et al., “Estimating maize seedling number with UAV RGB images and advanced image processing methods”, Precision Agriculture, vol. 23, n.º 5, pp. 1604–1632, oct. 2022. ↑19

X. Yu, D. Yin, H. Xu, et al., “Maize tassel number and tasseling stage monitoring based on near-ground and UAV RGB images by improved YoloV8”, Precision Agriculture, vol. 25, n.º 4, pp. 1800–1838, ag. 2024. ↑19

W. Liu, J. Zhou, B. Wang, et al., “IntegrateNet: a deep learning network for maize stand counting from UAV imagery by integrating density and local count maps”, IEEE Geoscience and Remote Sensing Letters, vol. 19, pp. 1–5, jun. 2022. Disponible en: https://ieeexplore.ieee.org/document/9807329 ↑19

A. Bawa, S. Samanta, S. K. Himanshu, et al., “A support vector machine and image processing based approach for counting open cotton bolls and estimating lint yield from UAV imagery”, Smart Agricultural Technology, vol. 3, febr. 2023. ↑20

M. Smajlhodžić-Deljo, M. Hundur Hiyari, L. Gurbeta Pokvić, et al., “Using data-driven computer vision techniques to improve wheat yield prediction”, AgriEngineering, vol. 6, n.º 4, pp. 4704–4719, dic. 2024. Disponible en: https://www.mdpi.com/2624-7402/6/4/26920 ↑20

O. Ameslek, H. Zahir, S. Mitro, et al., “Identification and mapping of individual trees from unmanned aerial vehicle imagery using an object-based convolutional neural network”, Remote Sensing in Earth Systems Sciences, vol. 7, n.º 3, pp. 172–182, sept. 2024. Disponible en: https://doi.org/10.1007/s41976-024-00117-z ↑20

T. Kattenborn, J. Eichel, y F. E. Fassnacht, “Convolutional neural networks enable efficient, accurate and fine-grained segmentation of plant species and communities from high-resolution UAV imagery”, Scientific Reports, vol. 9, n.º 11, art. 17656, nov, 2019. ↑20

T. Kattenborn, J. Leitloff, F. Schiefer, et al., “Review on convolutional neural networks (CNN) in vegetation remote sensing”, ISPRS Journal of Photogrammetry and Remote Sensing, vol. 173, pp. 24–49, mzo. 2021. ↑20

License

Copyright 2024 Elías Buitrago Bolívar, John Alexander Rico Franco, Sócrates Rojas Amador

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International license.

This license lets others remix, adapt, and build upon the work even for commercial purposes, as long as they credit the authors and license their new creations under identical terms. It is often compared to "copyleft" free and open source software licenses. All new works based on this one will carry the same license, so any derivatives will also allow commercial use. This is the license used by Wikipedia, and it is recommended for materials that would benefit from incorporating content from Wikipedia and similarly licensed projects.