DOI: https://doi.org/10.14483/22487085.3150

Published: 2007-01-01

Issue: No. 9 (2007)

Section: Theme Review

Implications of multimodal learning models for foreign language teaching and learning

Keywords: multimodal discourse, literacies, multimodal principles, design.


Colombian Applied Linguistics Journal, No. 9 (September 2007), pp. 174-199


Miguel Farías

E-mail: mfarias@usach.cl

Katica Obilinovic

E-mail: kobilinovic@usach.cl

Roxana Orrego

E-mail: rorrego@usach.cl

Abstract

This literature review article approaches the topic of information and communications technologies from the perspective of their impact on the language learning process, with particular emphasis on the most appropriate designs of multimodal texts as informed by models of multimodal learning. The first part contextualizes multimodality within the fields of discourse studies, the psychology of learning and CALL; the second deals with multimodal conceptions of reading and writing by discussing hypertextuality and literacy. A final section outlines the possible implications of multimodal learning models for foreign language teaching and learning.

Key words: multimodal discourse, literacies, multimodal principles and design

Summary

This article reviews the literature on information and communications technologies from the perspective of their impact on the process of learning language(s), with particular emphasis on the most relevant multimodal designs as indicated by multimodal learning models. The first part contextualizes multimodality within the fields of discourse studies, the psychology of learning and CALL; the second addresses multimodal reading and writing through a discussion of the concepts of hypertextuality and literacy. The final section sets out the possible implications of multimodal learning models for the teaching and learning of foreign/second languages.

Key words: multimodal discourse, literacies, multimodal principles and designs.

Introduction

Our interest in multimedia learning can be traced to several questions we asked ourselves while trying to understand the impact of information and communications technologies (ICTs) on our lives as citizens, individuals and teacher educators. As we notice a gap in computer literacy skills between generations of colleagues and learners, we also observe variations in the cognitive skills that these technologies are making possible. Turkle (1984, 1995, in press) was a pioneering voice in this respect, as she tried to give an account, from a psychosocial perspective, of how identities are constructed in the virtual realities created via the Internet. For her, ICTs have introduced new tools that we think with; consequently, the ways in which we think also change. New identities are born that are tethered to communications devices and to the things those devices can reach: a tethered self who is "always-on/always-on-us" (a play on words implying that technological gadgets are always activated and that their presence always haunts us), shaped by a world of rapid response and whose success is measured by calls made, e-mails answered and contacts reached (Turkle in press: 16). From the perspective of applied linguistics, we are particularly interested in the effects that this emerging and changing scenario is having on the processes of language production and interpretation.

The changes introduced by ICTs in the cultural landscape have been approached as a new revolution, labelled by some authors (Turkle 1995, Vandendorpe 1999, Avila 2006) as "from page to screen". Some of these researchers have drawn a parallel between the invention of the printing press and the widespread use of computers, treating both as revolutions with a profound impact on human societies. Others, however, are more critical and do not jump on the bandwagon so readily: they point out that the same inequalities found in access to books can be observed in access to computers, creating what some call the digital divide (Piscitelli 2004). Although access is still a problem that permeates our Latin American societies, it is evident that our conceptions of language and communication have shifted radically with the arrival of the computer and its digital capabilities. Just take the possibility of cutting and pasting and compare it (if you belong to the typographic generation) with the same process on a typewriter.

Communications, on the other hand, have also changed radically. Again, just compare (we are writing at Christmas time) how many cards you wrote and received fifteen years ago with the few (if any) you write and receive now: most of them are probably in your computer’s memory or on some website, or they were e-mail messages, sometimes sent to multiple recipients at once. As Crystal (2001:238) claims, the electronic revolution is also bringing about a linguistic revolution, for "Netspeak is something completely new. It is neither ‘spoken writing’ nor ‘written speech’" (see also Farías 2003).

Such change has obviously affected school communities, and the relationships established between teachers and students now include other modes of cognitive involvement and social interaction made possible by digital online communication. This diversity in language processing skills has led some authors to coin the concept of multiliteracies to account for the new competences that the digital era requires, including visual literacy, TV literacy and computer literacy (Rocap 2003, Cope and Kalantzis 2000). In fact, the collection compiled by Cope and Kalantzis (2000) summarizes the concerns that a group of scholars known as the New London Group raised in their discussions of literacy pedagogy, social futures and their implications for language teaching. The socio-cultural context in which these new modes of producing and comprehending language emerged, and the implications they may have for literacy education projects, have been thoroughly reviewed by Clavijo and Quintana (2004) in the first part of their book; the second part provides illustrations from students and teachers who explored the world of hypertextuality. We consider the work of these two Colombian researchers a solid and groundbreaking contribution to the application of contextualized models of digital literacy in both mother-tongue and foreign language teaching and learning. In the Chilean context, Farías (2004a and 2004b) has introduced the issues of multimodal learning and language teaching to the specialized TEFL community.

In this paper, we first place the issue of multimedia learning in the larger context of the effects of ICTs on the creation of intersubjectivities by reviewing the literature from discourse studies, research in the psychology of learning, and the line of research known as Computer Assisted Language Learning (CALL). Second, we discuss the implications of the new modes of language representation and production afforded by ICTs, with special reference to hypertextuality and literacy. Finally, we approach the concept of multimedia learning and its possible implications for foreign language learning and teaching. A final caveat: most of the discussion and potential applications of the literature reviewed here certainly apply to learning in general; however, our natural domain is second/foreign language learning, and it is here that experimental research is badly needed.

1. Multimodal discourse and semiotic models of text interpretation.

Authors such as Kress and van Leeuwen (1996, 2001) have paved the way for the discussion of new modalities of textual presentation, which they call multimodal discourse. These new types of discourse would require a semiotic treatment, as they are produced and interpreted by drawing on several codes: images, layout, letters, colors and sound. Their work centers on understanding the changing portrayals of information brought about by new language processing technologies, with particular attention to the increasing importance of visual communication and the replacement of traditional written texts by more visually charged ones. Kress and van Leeuwen (2001) set the ground for a semiotic and discourse account of multimodal texts by investigating communication as "a process in which a semiotic product or event is both articulated or produced and interpreted or used" (p. 20). Previously, Kress and van Leeuwen (1996) had explored these issues by looking at what they called the ‘grammar of visual design’ needed to understand the meanings conveyed by images. Such interpreting skills would be at the heart of visual literacy. One point they raise that is important for us as educators is the value of visual texts in students’ lives outside school, as opposed to the prominence of written texts in the school curriculum.

Kress, Jewitt, Ogborn and Tsatsarelis (2001) continue the tradition set by Kress and van Leeuwen by exploring multimodality in the science classroom. For them, language is only one of the many modes of communication active in the classroom. One of their suggestions for teacher education is that "teachers must be given the means to become highly reflexive of their practice ... particularly of the interaction between modes and shape of knowledge, and between modes and potentials for receptiveness by the students" (p. 177). For the purpose of this review, we distinguish between two concepts, multimedia and multimodality, which need operational definitions because they are central to the principles underpinning the models we have reviewed. Multimedia refers to the instructor using more than one presentation medium, whereas multimodality refers to the learner using more than one sense modality (Mayer and Sims 1994). Despite this distinction, there is no agreement in the literature, and the two terms are often used interchangeably.

1.1. Multimedia learning, models and principles.

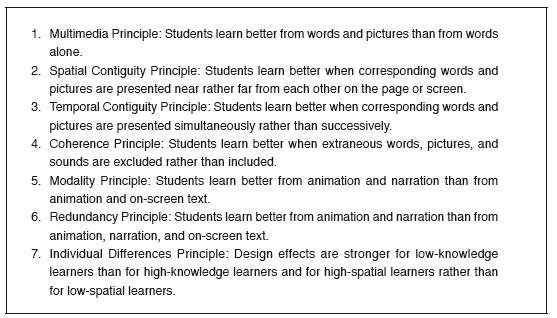

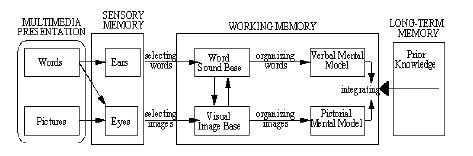

Turning to the effects that these new modes of information representation are having on the learning process, Mayer (2001, 2005a) and Schnotz (2005) have developed two complementary models. Mayer’s model of multimedia learning is based on the assumption that learners comprehend better when content is presented in words and pictures. In presenting his theory, Mayer (2001) discusses three views of multimedia, two views of multimedia design, two metaphors of multimedia learning, three kinds of multimedia learning outcomes, two kinds of active learning, and seven principles of multimedia design. In an accessible, pedagogical style, Mayer (2001) looks at multimedia from three perspectives: as delivery media (combining two or more delivery devices, such as an overhead projector and the lecturer’s voice), as presentation modes (representations that include words and pictures, such as on-screen text and animation) and as sensory modalities (the visual and auditory senses, used for example to process slides and narration). Drawing on Paivio’s dual-coding theory, which holds that humans possess separate systems for processing verbal and pictorial information, Mayer focuses on the presentation-mode view as the one most consistent with a cognitive view of human learning. As Figure 2 shows, his theory of multimedia learning combines pictorial and verbal channels that are integrated in working memory together with the learner’s prior knowledge from long-term memory.

Following a similar rationale, Mayer opts for a learner-centered rather than a technology-centered view of multimedia design. This view also inspires our work when we look back at the promises that technologies have held out for learning, which have not yielded the expected results because the emphasis has been on technology rather than on learning. In this respect, Lajoie (2000) makes the following comment: "Changes in the availability and flexibility of technologies are allowing for greater creativity in the ways in which these technologies are used for education and training", and asks: "are these changes in educational use driven by learning and instructional theories, or do the technological advances drive them?" (p. xvii). As for the metaphors, Mayer again subscribes to a view of multimedia learning as knowledge constructed through sense-making activities rather than as information acquired and stored by a passive learner (cf. the empty-vessel metaphor). This view, in turn, is consistent with Mayer’s (1997) evolving theory of learning, which comprises three stages: response strengthening, information processing and knowledge construction. As for the outcomes of multimedia learning, measured in terms of retention and transfer, there are three possibilities: no learning (poor retention and poor transfer), rote learning (good retention and poor transfer) and meaningful learning (good retention and good transfer). If meaningful learning is to be promoted, active learning should be encouraged in both of its kinds: behavioral activity and cognitive activity. Regarding meaningful learning, Mayer (2001) writes: "My point is that well-designed multimedia instructional messages can promote active cognitive processing in learners, even when learners seem to be behaviorally inactive" (p. 19). Finally, for multimedia design, seven principles are postulated:

Figure 1. Taken from ‘Seven research-based principles for the design of multimedia messages’ (Mayer 2001: 184).
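Returning to the three learning outcomes described above, they can be read as a simple decision table over retention and transfer results. The Python sketch below is only an illustration under stated assumptions: the function name `classify_outcome` is hypothetical, and deciding what counts as "good" retention or transfer (for instance, a cut-off score on a post-test) is assumed to have been done beforehand by the researcher.

```python
from typing import Optional


def classify_outcome(good_retention: bool, good_transfer: bool) -> Optional[str]:
    """Map retention/transfer judgements onto Mayer's (2001) three outcomes.

    Hypothetical helper: the 'good'/'poor' judgements are inputs, not
    something this function computes.
    """
    if not good_retention and not good_transfer:
        return "no learning"
    if good_retention and not good_transfer:
        return "rote learning"
    if good_retention and good_transfer:
        return "meaningful learning"
    # Poor retention with good transfer is not one of the three outcomes
    # described, so the sketch leaves it unclassified.
    return None


# Hypothetical results for three learners: (good_retention, good_transfer)
for retention, transfer in [(False, False), (True, False), (True, True)]:
    print(retention, transfer, "->", classify_outcome(retention, transfer))
```

Returning `None` for the fourth combination makes explicit that the three-outcome scheme does not cover every possible pairing of results.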

A somewhat different set of principles is presented in Mayer (2005b), which introduces a personalization principle: learning is deeper when words are presented in a conversational rather than a formal style. Mayer (2005b) also adds two more principles: an interactivity principle (deeper learning occurs when learners are allowed to control the presentation rate than when they are not) and a signalling principle (deeper learning takes place when key steps in the narration are signalled rather than non-signalled). Designers of TEFL materials should therefore pay attention to these principles when developing multimodal texts.

Figure 2. Mayer’s cognitive theory of multimedia learning. Taken from ‘Multimedia learning: guiding visuospatial thinking with instructional animations’ (Mayer 2005b: 480).

Schnotz (2005), for his part, has proposed the Integrated Model of Text and Picture Comprehension (ITPC), which attempts to account for "how individuals understand text and pictures presented in different sensory modalities" (p. 67). Following Paivio’s dual-coding concept, the model postulates that the human mind has a verbal system and an image system, each with its own form of mental code. Schnotz, however, departs from dual-coding theory by suggesting that "multiple representations are formed both in text comprehension and in picture comprehension" (p. 54). When Schnotz turns to the instructional implications of his model, he highlights what it shares with Mayer’s: both discard any rule of thumb suggesting that the mere use of multiple forms of representation and multiple sensory channels automatically leads to effective multimedia learning. Instead, Schnotz holds that successful multimedia learning depends on "an understanding of human perception and human cognitive processing based on careful empirical research" (p. 65). These implications are therefore aimed primarily at instructional material designers, who should "resist the temptation to add irrelevant bells and whistles to multimedia learning environments" (p. 65). However, the ITPC model departs from Mayer’s in postulating three additional principles: the picture-text sequencing principle, the structure-mapping principle and the general redundancy principle. The picture-text sequencing principle states that if a picture and a written text cannot be presented simultaneously, the picture should be presented before the text. The structure-mapping principle rests on the idea that the structure of a picture carries over into the learner’s mental representation; it therefore postulates that, among several pictures that could visualize the same subject matter, the one best suited to solving future tasks should be chosen. Finally, the general redundancy principle claims that pictures and text should not be combined if learners have sufficient prior knowledge and cognitive ability to build a mental model from either the picture or the text alone.
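Two of these principles are conditional, so they can be sketched as a small decision procedure. The Python function below is a hypothetical illustration, not part of Schnotz’s model: the name `plan_presentation` and its two yes/no inputs are assumptions, and the structure-mapping principle is left out because choosing the most task-appropriate picture cannot be reduced to a simple flag.

```python
from typing import List


def plan_presentation(can_show_simultaneously: bool,
                      learner_can_model_from_either: bool) -> List[str]:
    """Apply two ITPC principles to a picture and a written text (sketch).

    Hypothetical helper; returns the presentation steps in order.
    """
    # General redundancy principle: a learner who can already build a
    # mental model from either source does not need both combined.
    if learner_can_model_from_either:
        return ["picture or text, not both"]
    if can_show_simultaneously:
        return ["picture and text together"]
    # Picture-text sequencing principle: if the two cannot be shown at
    # the same time, the picture comes first.
    return ["picture", "text"]


print(plan_presentation(can_show_simultaneously=False,
                        learner_can_model_from_either=False))
```

Checking redundancy first reflects the idea that, for a sufficiently knowledgeable learner, the question of sequencing does not arise because the two sources should not be combined at all.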

A concluding remark on these models of multimodal learning, one that stresses the learner’s necessary cognitive involvement, comes from Schnotz (2002), who asserts that "visuo-spatial text adjuncts and other forms of visual displays can support communication, thinking, and learning only if they interact appropriately with the individual’s cognitive system" (p. 113).

As with many new areas of instructional research and practice, these models and principles should be widely tested in contextualized settings to establish their applicability. This paper aims to lay the groundwork for such research in the teaching and learning of English in Latin American settings, so that we can eventually either support or refute criticisms suggesting that "as student interest in multimedia courses increases, learning tends to decrease because students may feel that learning in these courses requires less work" (Clark and Feldon 2005: 111).

1.2. Computer Assisted Language Learning (CALL), Multimedia and SLA.

In English language teaching and learning (ELTL), the field of computer assisted language learning (CALL) has been the bridge through which language teachers and researchers approach the impact of ICTs in the classroom. Warschauer (2002) presented multimedia as one of the latest developments in CALL, in what he called "integrative CALL", marked by the advent of multimodal software, hypermedia, the Internet, the World Wide Web and CD-ROMs. Chapelle (2001), for her part, discussed computer applications in three areas of SLA: computer-assisted second language learning, computer-assisted second language assessment and computer-assisted second language research. Although most of her analysis is technology-based, i.e., concerned with how the computer can aid the three areas mentioned, her words on evaluating CALL tasks are closer to our concerns: "Tasks not intended to promote learning in more than an incidental way, may be good for other purposes, but it would be difficult to argue that they should play a central role in L2 teaching. [...] in designing language learning tasks, the criteria of language learning potential should be considered the most important" (Chapelle 2001: 58).

Plass and Jones (2005) synthesize the concern we have been dealing with so far, namely how second language learning can benefit from multimedia, by integrating models of multimedia learning and second language acquisition. For multimedia, they adopt Mayer’s model of multimedia learning; for SLA, they follow the interactionist model proposed by Chapelle and take some elements from Ellis’s model of SLA, as they ask: "In what way can multimedia support second-language acquisition by providing comprehensible input, facilitating meaningful interaction, and eliciting comprehensible output?" (Plass and Jones 2005: 471). Their integrated model allows them to describe the cognitive processes involved in SLA and the possible multimedia strategies to support them.

2. The impact of the multimodal/digital revolution on the processes of reading and writing.

Texts come in different formats and make use of different modes of communication. We are living through an explosion of the digital era in which the notion of text has changed dramatically. As linguists and applied linguists, we have traditionally viewed and analysed texts as a purely linguistic phenomenon. Today, however, texts can no longer be thought of in this way, since most of them combine visual and written modes of representing information. In this regard, Jewitt (2005: 317) states: "Until recently the dominance of image over word was a feature of texts, on screen and off screen: there are more images on screen and images are increasingly given a designed prominence over the written elements." Along the same lines, she observes that "Despite the multimodal character of screen-based texts and the process of text design and production, reading educational policy and assessment continue to promote a linguistic view of literacy and linear view of reading" (p. 330).

This revolution has been part of the communication landscape for quite a while, unfolding over the past 30 to 40 years. If we look at old textbooks, magazines and newspapers, we can see that they were covered in print; present-day newspapers, by contrast, combine images with text (Kress, 2000). All this means that a textual shift has occurred, and we, as linguists, should be aware of it, and even more so of the effects this change has had on the processes of reading and writing.

Earlier theories of reading were based on an idealized representation of text that highlighted the linear presentation of information (Gough, 1985; LaBerge & Samuels, 1974, 1985; Samuels, 1985). Text was conceived as linear, closed and finished. In this new scenario, our own conceptualizations of these processes seem old-fashioned and need to be revised so that reading and writing can be re-addressed from a multimodal perspective. It is therefore important to open our eyes to the effects that multimodality has on reading and writing. In what follows, we briefly discuss this impact; it is not the purpose of this article to cover the whole discussion around these topics.

We assume that reading multimodal texts is a different process from reading print-based, or monomodal, texts. Kress and van Leeuwen (1996, 2001) have challenged traditional literacy’s emphasis on print in light of the growing dominance of multimodal texts and digital technology. This means that reading comprehension theories based only on printed texts cannot account for the way people process multimodal texts containing images, print, sound and movement. According to Kress (1997, 2003), new types of texts require different conceptualisations and a different way of thinking. He also states that writing relies on the logic of speech, whereas graphics rely on the logic of the image. As a consequence, reading visual information would involve quite a different process from reading printed words.

What follows concerns the implications of multimodality for the processes of reading and writing. We begin by comparing how reading might differ when a reader reads a multimodal text as opposed to a monomodal text. The first thing to remember is that a multimodal text combines different representational levels of information by using different formats. In other words, a multimodal text can be composed of images and text, or of images, text and sound, among other modes.

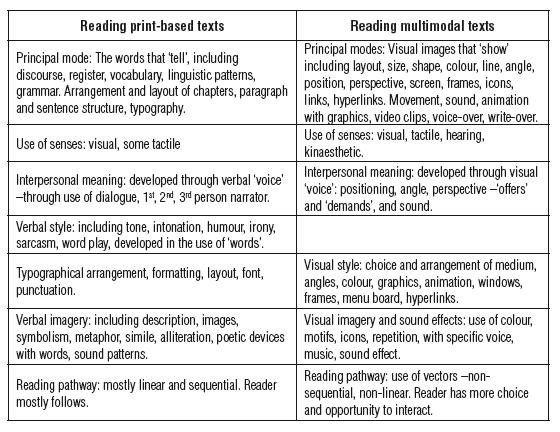

Walsh (2006) defines multimodal texts as "those texts that have more than one ‘mode’, so that meaning is communicated through a synchronisation of modes." Written text is only one part of a multimodal message, and different modes are orchestrated together to make meaning. In this new scenario, the associated process of multimodal reading comprehension must differ from reading print-based texts. Accordingly, Walsh (2006) sets out some similarities and differences between reading in a multimodal and a monomodal (print-based) environment. The following table summarizes these differences.

Figure 3. "Differences between reading of print-based and multimodal texts". Taken from Walsh (2006: 35).

Kress (2003), for his part, conceives of reading multimodal texts as reading as semiosis. Describing the current landscape of communication, Kress argues that there has been a move from ‘telling’ the world to ‘showing’ the world. This change, he says, points to a profound shift in the act of reading, which can be characterised by phrases such as ‘reading as interpreting’ and ‘reading as ordering’. The idea behind these statements is that we can no longer think narrowly of making meaning exclusively from written text. From these differences we can infer that reading multimodal texts involves establishing different reading paths. To some extent, reading a monomodal text involves reading sequentially; when students (and readers in general) are exposed to hypertexts or multimodal texts, by contrast, it is quite difficult to establish which reading paths they follow. In this connection, authors such as Mayer and Moreno (2003) claim that multimedia texts impose a heavy cognitive load on working memory. Mayer’s multimedia principles would then provide a model for identifying and evaluating the strengths and weaknesses of different designs in terms of their potential for retention and transfer, two processes essential to learning another language.

2.1. Writing and hypertextuality

Opinions differ, sometimes sharply, on how multimodality affects our understanding of the writing process. Jewitt (2005), for instance, argues that print-based reading and writing have always been multimodal, because they require the interpretation and design of visual marks, space, colour, font or style, and increasingly image and other modes of representation. She also points out that "the new technologies emphasize the visual potential of writing in ways that bring forth new configurations of image and writing on screen: font, bold, italic, colour, layout, and beyond" (Jewitt, 2005: 321). New technologies now give people a range of computer applications with which to design and write their texts. Word processing, for example, enables students to design and redesign their written texts: they can alter the page set-up, change the margins, switch between font styles and sizes, import and delete images, and so on. Recent versions of Microsoft Word allow writers to combine different formats to represent information. Whenever students use these affordances, they have to make decisions and negotiate the design of their texts, such as whether to use a given font or border, or whether to import images from "clip art", among many others.

In addition, Braaksma, Rijlaarsdam, Couzijn and van den Bergh (2002) state that the main difference between composing hypertext and composing linear text lies in the way writers structure information. According to them, hypertext allows students to structure information through a process of hierarchicalization, whereas linear writing involves a process of linearization.
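One way to picture this contrast is to treat the writer’s content plan as a hierarchy: in a hypertext, each topic can remain a separate node linked to its subtopics, whereas a linear text must flatten that hierarchy into a single sequence. The sketch below is an illustrative assumption, not Braaksma et al.’s procedure; it uses a hypothetical outline (`OUTLINE`) and a depth-first, pre-order traversal (`linearize`) as just one of many possible linearizations.

```python
from typing import Dict, List

# Hypothetical content plan: each topic maps to its subtopics.
# A hypertext could keep this structure as linked nodes.
OUTLINE: Dict[str, List[str]] = {
    "Multimodal texts": ["Images", "Written text"],
    "Images": ["Layout", "Colour"],
    "Written text": [],
    "Layout": [],
    "Colour": [],
}


def linearize(outline: Dict[str, List[str]], root: str) -> List[str]:
    """Flatten the hierarchy into one reading order (pre-order, depth-first)."""
    order = [root]
    for child in outline[root]:
        order.extend(linearize(outline, child))
    return order


print(linearize(OUTLINE, "Multimodal texts"))
```

Any other traversal order would be an equally valid linear text; the point is that the linear writer must commit to one order, while the hypertext writer can leave the hierarchy in place.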

Other authors, such as Clavijo and Quintana (2004), see the affordances of hypertext as a potential stimulus for the writer’s creativity. They argue that the possibility of creating hypertexts allows students to move from a traditionally centred, linear writing process to one that can foster multilinearity and hypertextuality. They developed a project with English Pedagogy students at the Universidad Distrital Francisco José de Caldas in Colombia, in which students write "hyperstories" using all the affordances that hypertext offers today.

Along the same lines, Douglas (2007) highlights the potential of hyperstories, which he calls "interactive narratives" (narratives written in hypertext). According to him, hypertext narratives encourage readers to shape the outcomes of the stories they read through the decisions they make while reading. In other words, readers can create their own narratives by making the selections that the software allows. Programs such as Apple’s HyperCard, among others on the market, are used for this purpose, building on the hypertext ideas set out in Nelson’s book Literary Machines.
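The mechanism Douglas describes, in which the reader’s selections determine which passages are read and how the story ends, can be pictured as a graph of passages joined by links. The Python sketch below assumes a toy three-passage story; the names `STORY` and `read` are hypothetical and do not correspond to HyperCard or any other program mentioned above.

```python
from typing import Dict, List, Tuple

# Each passage has its text and a mapping from choice labels (the links)
# to the next passage. This toy story is invented for illustration.
Story = Dict[str, Tuple[str, Dict[str, str]]]

STORY: Story = {
    "start": ("You find a locked door.", {"knock": "inside", "leave": "street"}),
    "inside": ("A stranger lets you in. The end.", {}),
    "street": ("You walk back into the rain. The end.", {}),
}


def read(story: Story, choices: List[str], node: str = "start") -> List[str]:
    """Follow the reader's choices through the story; return the passages read."""
    path = [story[node][0]]
    for choice in choices:
        links = story[node][1]
        if choice not in links:
            break  # no such link from this passage, so the reading stops here
        node = links[choice]
        path.append(story[node][0])
    return path


print(read(STORY, ["knock"]))  # one reader's path
print(read(STORY, ["leave"]))  # a different choice leads to a different ending
```

Two readers starting from the same passage end up with different stories, which is the sense in which the reader, not only the author, shapes the outcome.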

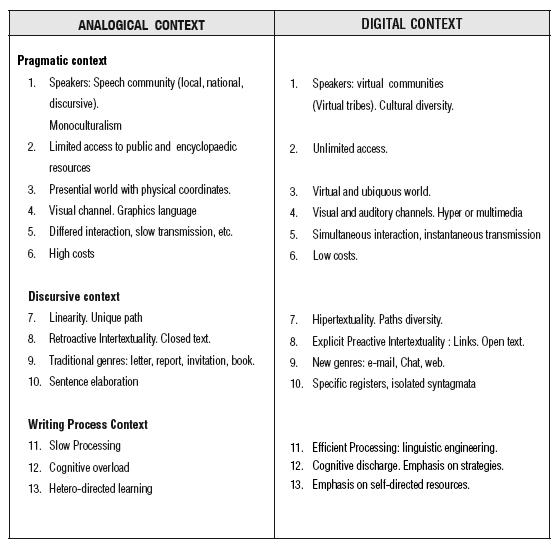

Some authors are optimistic about the effects that the digital change will have on the processes of reading and writing. Among them, Cassany (2000) points out that writing hypertexts will help make the writing process more strategic and more effective. He believes that the digital change will allow people to self-direct their writing by using all the affordances that digital platforms have to offer. We think that writing hypertexts and hyperstories, only two of the many possibilities that cyberspace offers, will help improve our students’ writing. It is now our responsibility as teachers to evaluate and research the effects of the new digital technologies on the processes of text comprehension and language production. The effects of the digital change on writing are well summarized by Cassany (2000: 4) in the following chart:

Figure 4. Taken from "De lo analógico a lo digital. El futuro de la enseñanza de la composición" (Cassany, 2000:4)

3. Computers and minds: Technology-centered research versus learner-centered research

The motivations underlying research on Information and Communication Technologies (ICTs) are varied. Finding out what type of access different cultural groups within a country have today, how sophisticated the equipment in use is, and what training professionals and students need to keep up with the state of the art are some examples of technology-centered research. Although this is perfectly valid, the motivation behind our own research is different. It aims to find out the potential impact of the new technologies on learning, which means that our focus is not on the technologies themselves but on how the learner’s mind is working, adjusting and benefiting from exposure to ways of obtaining information that differ from the printed text.

The new modes that technology is offering lead us to think that new cognitions are needed on the part of individuals who process information and construct knowledge in a non-traditional manner, and who have been doing so for decades now.

Are the new modes of presenting information making our youngsters’ reading more fluent and their comprehension deeper than traditional flat text does? Is their "little black box" benefiting from the hypertextual, non-linear process of knowledge construction? Are our screenagers (Brant 2003) reading faster and more fluently, although less deeply? Are they becoming intellectually better equipped to interpret the broad ideational complexity of a text as well as the details that are also part of it? Do images and movement matter more than printed, flat text alone? These are some of the many questions that stem from our interest in learner-centered research on the new technologies and the new literacies.

3.1. Re-visiting conceptions of language and learning

Throughout the history of linguistics and psychology, the conceptions of "language" and "learning" have changed. Each definition has stemmed from the psychological and linguistic stance prevailing at the time.

Brown (1994:5) presents eight such attempts to characterize language. Among them are language as systematic and generative, language as a set of primarily vocal symbols, and language as communication.

Brown (1994:7) likewise covers a broad spectrum of definitions of learning:

- learning as synonymous with acquisition, an obviously controversial definition for those who draw a sharp line between language learning and language acquisition;
- learning as the retention of information, which places memory and learning together, or at least treats them as two sides of the same coin;
- learning as an active and conscious process.

Nor does he leave out the behavioristic definition, which focuses on a change in behaviour.

McCarthy (2001) summarizes the current controversy over conceptions of language and language learning. The strictly psycholinguistic perspective rests on a conception of language as an abstract system of rules. The child, in the role of a "little linguist", is able to discover these rules provided that he or she is exposed to a given language. Although psycholinguists do not necessarily neglect the role of the environment, their focus is on what takes place inside the child's mind. The sociolinguistic perspective, on the other hand, overemphasizes the social function and purpose of language.

These apparently conflicting perspectives are reflected in the explanations given for the causative factors of language learning. On the one hand, there is the human being's genetic predisposition. On the other, there is the power of the environment, defined in terms of the help that "motherese" seems to give the child. Motherese, also called "caretaker speech", is the input addressed to the child by the person who takes care of him or her, and it is characterized by considerable simplification. The caretaker engages in social negotiation with the child by adapting his or her speech in form and content to the child's needs, thus co-constructing language.

3.2. Multimodality and Second Language Acquisition

When attempting to relate the multidisciplinary domains subsumed under multimodality and SLA, it is impossible to avoid the current controversy between the strictly psycholinguistic stance and the sociolinguistic position. The following quotations from Firth and Wagner and from Michael Long capture this controversy. Firth and Wagner's paper received considerable criticism, and it forms the basis for the whole section on second language acquisition in the book edited by Seidlhofer (2003). Their argument does not necessarily call for excluding the cognitive stance in favor of an exclusively sociolinguistic one. In fact, they contend that:

Our ultimate goal is to argue for a reconceptualization of SLA as a more theoretically and methodologically balanced enterprise that endeavours to attend to, explicate, and explore, in more equal measures and, where possible, in integrated ways, both the SOCIAL and COGNITIVE dimensions of S/FL use and acquisition. (p.175)

In the same controversial article, these authors argue that:

Researchers working with a reconceptualised SLA will be better able to understand and explicate how language is used as it is being acquired through interaction, and used resourcefully, contingently, and contextually. Language is not only a cognitive phenomenon, the product of the individual’s brain; it is also fundamentally a social phenomenon, acquired and used interactively, in a variety of contexts for myriad practical purposes. (p.190)

Michael Long, one of several researchers who reacted against Firth and Wagner, responds:

Whether F & W like it or not (they do not), most SLA researchers view the object of inquiry as in large part an internal, mental process: the acquisition of new (linguistic) knowledge. And I would say, with good reason. SLA is a process that (often) takes place in a social setting, of course, but then so do most internal processes – learning, thinking, remembering, sexual arousal, and digestion, for example – and that neither obviates the need for theories of those processes, nor shifts the goal of inquiry to a theory of the settings. A theory of memory, for example, deals with such matters as relationships among the frequency and intensity of instances of the phenomena an individual experiences and the subset that are remembered, storage and retrieval of same, and so on, but not, or not "centrally," at least, with the social events, for example, courtroom testimony or storytelling in a pub, during which memories are put to use. (p.207)

We adhere to the psycholinguistic position, which emphasizes the processes that occur in the learner's mind. However, we believe that these two views need not be mutually exclusive. In this we agree with Susan Gass (in Seidlhofer, 2003), who states that:

Views of language that consider language as a social phenomenon and views of language that consider language to reside in the individual do not necessarily have to be incompatible. It may be the case that some parts of language are constructed socially, but that does not necessarily mean that we cannot investigate language as an abstract entity that resides in the individual. (p.227)

The knowledge that the learner's little black box builds is not constructed in a vacuum. We see interaction with "others" as a necessary springboard that feeds the mental processes in the learner's active mind. It is precisely here that multimodality can play an important role. Multimedia presentations can contribute substantially to designing an immediate environment similar to the contexts in which the mother, through her "motherese", interacts and negotiates meanings with the child's mind. Multimedia presentations can therefore be an excellent means of "re-constructing" a pseudo-natural environment. In such an environment, the negotiations of meaning that serve as the platform or scaffold for second language acquisition can take place.

The interaction between the teacher and second language learners can be accompanied by a combination of modes. Examples are animation (visuals and movement) and narration, provided not only by the teacher but also within the animation itself. Such a combination can provide a variety of contexts for developing all the components of communicative competence. These components include rules of appropriateness. Such rules are better perceived and remembered when the various modes that characterize daily life clearly show two things: the relationship between the interlocutors, and the immediate context in which these rules and formulas occur.

Although the two terms are sometimes used interchangeably, Mayer and Sims (1994) distinguish between them. They apply "multimedia" to the teacher's presentation of information through more than one medium, and "multimodal" to the learner's use of more than one sense. Thus, they state that:

Multimedia learning occurs when students use information presented in two or more formats – such as a visually presented animation and verbally presented narration- to construct knowledge. In a strict sense, our definition applies to the term "multimodal" (which refers to the idea that the learner uses more than one sense modality) rather than "multimedia" (which refers to the idea that the instructor uses more than one presentation medium). (pp.389, 390)

The multimodality era goes beyond both the generative-psycholinguistic and the interactionist-sociocultural interpretations of language learning. It considers several representational modes (verbal, visual, musical, gestural, to name a few), many of them extra-linguistic. It also considers several media (books, CD-ROMs, the teacher's body, sound). This drastic change in perspective derives from recent technological advances. It concerns all professionals whose disciplines deal, directly or indirectly, with language learning and communication.

Some cognitively oriented researchers focus on how multimodality affects what goes on in the learner's mind. Among them, Schnotz (2002) emphasizes the need to study the interaction between visual literacy and the individual's cognitive structures. He explains that visual modes of presentation may enhance communication and learning processes as long as they interact adequately with cognition. In other words, the many forms of visual display that accompany flat or printed texts are a rich source of research on learning and communication, insofar as they act directly or indirectly on the individual's mental processes.

3.3. Collocational and Sociolinguistic Competences

Larsen-Freeman (2003:14) explains that "a great deal of our ability to control language is due to the fact that we have committed to memory thousands of multiword sequences, lexicogrammatical units or formulas that are preassembled". Lewis (2000:177) acknowledges that "...proficiency in a language involves two systems, one formulaic and the other syntactic...". Both confirm what applied linguists have already argued about the implications of Corpus Linguistics. The language that expert users of a given language produce, orally and in writing, is not only the result of rule application. It is also the reproduction of multiword sequences that these speakers have memorized by rote.

In the terminology of education in general, knowing that something is the way it is (declarative knowledge) and knowing how to perform something well (procedural knowledge) are two different things. We know that a substantial proportion of the language we teach consists of combinations that our students simply need to remember and use automatically. That knowledge alone does not tell us how to guide students through the process that leads to fluency and appropriateness in the use of prefabricated speech.

Collocational competence (the use of canned speech) and sociolinguistic competence (rules of appropriateness) are both essential components of the broad concept of communicative competence. Both require special didactic treatment, for two reasons. The first is the adequate presentation of rules and contexts. The second, and main, reason is to ensure that learners retain the information they need, since retention is a prerequisite for its progressive automatization.

Multimedia messages can provide a context that brings a bit of reality into the classroom. This is not the same as believing that the objective of formal instruction is to replicate what happens in natural settings. We agree that the classroom can hardly substitute for the natural environment. "In the streets", as it were, learners grasp meanings within a series of complete scenes. In these scenes they perceive gestures, hear noises, ideally listen to and understand interlocutors, and visualize the broad field that frames the expression of meanings. Multimedia messages can become the means through which meanings are grasped within complex, "almost" real scenarios.

3.4. Practice versus noticing

As Lewis (2000) explains, when methodology was based on behavioural and structural principles, practice was conceived as a means towards automatization through pattern drilling. Lewis's lexical approach subordinates the syntactic system of language to the formulaic system of prefabricated pieces. It reformulates the essential condition for learning by shifting the emphasis from practice to the need to encounter new information several times. He explains his position as follows: "a lexical approach suggests that it is repeated meetings with an item, noticing it in context, which converts that item into intake." (p.171)

Lewis admits that the process of noticing is not easily defined. We may call it "noticing", "becoming aware of" or simply "conscious attention given to" new information. In any case, it is a necessary but not sufficient condition for learning. An individual may be using all his or her senses and full concentration. Yet if the information (rules or formulas to be memorized) is provided through many sources at once, it may overload the learner's mind (cognitive load), and this impedes learning.

This is a good example of the relationship between modes of presentation and cognitive processes. Mousavi, Low and Sweller (1995:320) explain that the so-called "split attention effect" occurs "when students must split their attention between multiple sources of information, which results in a heavy cognitive load." They refer to experiments in geometry whose results led them to posit that "effective working memory may be increased by presenting material in a mixed rather than a unitary mode." By a mixed mode they mean combined auditory and visual presentation, as the title of their study indicates.

3.5. How multimodality can accelerate classroom learning

The classroom as a metaphor has received at least two different interpretations of its main objective. On the one hand, the classroom is an artificial setting that cannot possibly compare with "being there", experiencing the language in an English-speaking environment. On the other, the classroom is a potential mirror of "the street".

Larsen-Freeman's (2003) notion of the "reflex fallacy" reminds us that the classroom is not designed to emulate the street. Its purpose is to improve on what natural acquisition does for the learner. Second language learners should therefore progress faster in a formal environment, even if they engage in discovery procedures. The teacher's input and capabilities can make up for the potentially chaotic exposure they would receive in a natural environment. She points out (p.20) that:

I have referred to this as the reflex fallacy (Larsen-Freeman, 1995), the assumption that it is our job to re-create in our classrooms the natural conditions of acquisition present in the external environment. Instead, what we want to do as language teachers, it seems to me, is to improve upon natural acquisition, not emulate it. Accelerating natural learning is, after all, the purpose of formal education.

Multimedia presentations lend themselves to the adequate treatment of the formulaic component of language. They also lend themselves to the contextualization of its pragmatic and sociolinguistic aspects.

3.6. How multimodality can contribute to education

The cognitive perspective indicates that learners may lack the cognitive structures needed to learn a given topic. In that case, the new information is not absorbed as we would expect. It falls into a vacuum, leading to little or no learning. Whether we teach chemistry, history, physics or a foreign language after puberty, we teachers are all too familiar with the difficulties our less privileged students encounter. This is particularly hard in heterogeneous classes and in groups of language learners with mixed proficiency levels. There, providing the necessary tools is difficult because the "floor" from which learners start lies at very different levels.

Teachers and professors face many difficulties. Two of them are having to compensate for a lack of cognitive structures, at least in some areas, and learners' rudimentary verbal skills in the first language, the second, or both. A relevant implication of Schnotz's view is that knowledge maps especially help two groups: learners with low prior knowledge, and learners whose verbal skills are still rather basic.

A final consideration concerns the present-day reality of state-funded schools in Chile, some of which serve highly deprived communities. Yet the ultimate goal of public schools in Chile is to develop in all learners the skills that will give them access to tertiary education in particular, and to social mobility in general.

Concluding remarks

The theoretical and practical implications presented in this paper suggest that multimedia presentations could be one way of doing just that, in two respects:

- compensating for the absence of appropriate cognitive structures in certain domains, an absence caused precisely by the lack of opportunities that characterizes certain communities and social groups;
- compensating for the weak or incomplete development of verbal skills.

The research agenda calls for studies that evaluate, among other things, the principles of multimodal learning and electronic multimedia designs. These studies should examine how both affect the acquisition of reading, writing, speaking and listening in various EFL contexts. A multidisciplinary approach is needed to understand the social, cognitive, neurological, cultural and linguistic variables involved in processing multimodal discourse. Questions such as the following can guide such an agenda:

- What types of multimedia design are most helpful for learners with different learning styles?
- Are some designs more appropriate as proficiency levels increase?
- What impact do the audio, linguistic, visual, gestural and spatial dimensions of meaning making have on the learning process?
- How can educators integrate these dimensions into a semiotic model of language learning?
- How can that model also be critical, so that learners evaluate the implications of multimedia designs?
- How is hypertextuality integrated and evaluated at the classroom level?
- Can teachers offer learners opportunities to select the processing mode that best fits their learning style?

The lines of research reviewed here can lay the groundwork for empirical investigations into the various arrangements and affordances that multimodality offers for language learning. The rapid shift from print-based to more visually oriented presentations of information also calls for a rapid response from language teachers and educators. They can take advantage of multimodality to engage learners in meaningful cognitive, social and critical understanding. Attention to the meaning-making potential of the various designs of multimodal discourse is an important component of visual literacy. It can help language learners cope more efficiently with new modes of presenting information.

References

- Avila, R. (2006). De la imprenta a la internet. Mexico, DF: Colegio de Mexico.

- Braaksma, M., Rijlaarsdam, G., Couzijn, M. and van den Bergh, H. (2002). Learning to compose hypertext and linear text: Transfer or inhibition? In R. Bromme and E. Stahl (Eds.), Writing hypertext and learning: Conceptual and empirical approaches. Advances in Learning and Instruction Series (pp. 15-37). London: Pergamon.

- Brant, M. (2003). Log on and Learn. Newsweek. August.

- Brown, H. D. (1994). Principles of language learning and teaching. New Jersey: Prentice Hall Regents.

- Cassany, D. (2000). De lo analógico a lo digital. El futuro de la enseñanza de la composición. Revista Latinoamericana de Lectura, año 21, 2, pp. 1-11.

- Chapelle, C. (2001). Computer applications in second language acquisition. Cambridge: CUP.

- Clavijo, A., and Quintana, A. (2004). Maestros y estudiantes escritores de hiperhistorias. Una experiencia pedagógica en lengua materna y en lengua extranjera. Bogotá: Colección Textos Universitarios Universidad Distrital Francisco José de Caldas.

- Clark, R. and Feldon, D. (2005). Five common but questionable principles of multimedia learning. In Mayer, R. (Ed.), The Cambridge handbook of multimedia learning (pp. 97-116). New York: CUP.

- Cope, B. and Kalantzis, M. (Eds). (2000). Multiliteracies. Literacy learning and the design of social futures. London: Routledge.

- Crystal, D. (2001). Language and the Internet. Cambridge: CUP.

- Douglas, J. Y. (2007). What hypertexts can do that print narratives cannot. Retrieved January 14, 2007, from http://www.nwe.ufl.edu/~jdouglas/reader.pdf.

- Farías, M. (2003). Análisis conversacional de un corpus reducido de discurso de una sala de chateo. In Valencia, A. (Ed.), Desde el Cono Sur (pp. 153-162). Santiago: LOM.

- Farías, M. (2004a). Textos multimodales en tiempos de competencias múltiples. Actas del XIV Congreso de SONAPLES, Osorno: Universidad de Los Lagos.

- Farías, M. (2004b). Multimodality in times of multiliteracies: implications for TEFL. In a Word, páginas. Santiago: TESOL Chile.

- Firth, A. and Wagner, J. (2003). On discourse, communication, and (some) fundamental concepts in SLA research (Text 20), Section on SLA. In Seidlhofer, B. (Ed.), Controversies in applied linguistics (pp. 173-198). New York: Oxford University Press.

- Gass, S. (2003). Apples and oranges: Or, why apples are not orange and don't need to be. A response to Firth and Wagner (Text 24). In Seidlhofer, B. (Ed.), Controversies in applied linguistics (pp. 220-231). New York: Oxford University Press.

- Gough, P. (1985a). One second of reading. In H. Singer & B. Ruddell (Eds.), Theoretical models and processes of reading (pp. 661 – 686). Newark, Delaware, IRA & Erlbaum.

- Jewitt, C. (2005). Multimodality, "reading", and "writing" for the 21st Century. Discourse: studies in the cultural politics of education, Vol. 26, N°3, pp. 315-331.

- Kress, G. and van Leeuwen, T. (1996). Reading images: The grammar of visual design. London: Routledge.

- Kress, G. R. (1997). Visual and verbal modes of representation in electronically mediated communication: the potentials of new forms of text. In I. Snyder (ed.) (1997) Page to screen. London: Routledge.

- Kress, G. R. (2000). Design and transformation. In B. Cope and M. Kalantzis (Eds.), Multiliteracies. London: Routledge.

- Kress, G., Leite-García, R. and van Leeuwen, T. (2000). Semiótica discursiva. In T. van Dijk (Ed.), El discurso como estructura y proceso (pp. 373-416). Barcelona: Gedisa.

- Kress, G., Jewitt, C., Ogborn, J. and Tsatsarelis, C. (2001). Multimodal teaching and learning. London: Continuum.

- Kress, G. R. and van Leeuwen, T. (2001). Multimodal discourse: The modes and media of contemporary communication. London: Edward Arnold.

- Kress, G. R. (2003). Literacy in the new media age. New York: Routledge.

- LaBerge, D. and Samuels, S. J. (1985). Toward a theory of automatic information processing of reading. In H. Singer & B. Ruddell (Eds.), Theoretical models and processes of reading (pp. 689-718). Newark, Delaware: IRA & Erlbaum.

- Lajoie, S. (Ed.). (2000). Computers as cognitive tools. No more walls. Mahwah, NJ: Lawrence Erlbaum.

- Lankshear, C. (1997). Changing literacies. Buckingham: Open University Press.

- Larsen-Freeman, D. (2003). Teaching language: From grammar to grammaring. Boston: Thomson-Heinle.

- Lewis, M. (Ed.). (2000). Teaching collocation. Hove: Language Teaching Publications.

- Long, M. H. (2003). Construct validity in SLA research: A response to Firth and Wagner (Text 22). In Seidlhofer, B. (Ed.), Controversies in applied linguistics (pp. 206-214). New York: Oxford University Press.

- Mayer, R. E. and Sims, K. V. (1994). For whom is a picture worth a thousand words? Extensions of a dual-coding theory of multimedia learning. Journal of Educational Psychology, Vol. 86, No 3, 389-401.

- Mayer, R. (1997). Learners as information processors: Legacies and limitations of educational psychology's second metaphor. Educational Psychologist, 31 (3/4), 151-161.

- Mayer, R. (2001). Multimedia learning. New York: CUP.

- Mayer, R. (Ed.). (2005a). The Cambridge handbook of multimedia learning. New York: CUP.

- Mayer, R. (2005b). Multimedia learning: Guiding visuospatial thinking with instructional animation. In Shah, P. and Miyake, A. (Eds.), The Cambridge handbook of visuospatial thinking. Cambridge, MA: CUP.

- Mayer, R. and Moreno, R. (2003). Nine ways to reduce cognitive load in multimedia learning. Educational Psychologist, Vol. 38, No 1, pp. 43-52.

- McCarthy, M. (2001). Issues in applied linguistics. New York: Cambridge University Press.

- Mousavi, Y. S., Low, R., and Sweller, J. (1995). Reducing cognitive load by mixing auditory and visual presentation modes. Journal of Educational Psychology, Vol. 87, No 2, 319-334.

- Piscitelli, A. (2004). Internet. La imprenta del siglo XXI. Buenos Aires: Gedisa.

- Plass, J. and Jones, L. (2005). Multimedia learning in second language acquisition. In Mayer, R. (Ed.), The Cambridge handbook of multimedia learning (pp. 467-488). New York: CUP.

- Rocap, K. (2003). Defining and designing literacy for the 21st century. In Solomon, Allen and Resta (Eds.), Toward digital equity: Bridging the divide in education. Boston: Allyn and Bacon.

- Samuels, S. J. (1985). Toward a theory of automatic information processing of reading: updated. In H. Singer & B. Ruddell (Eds.), Theoretical models and processes of reading (pp. 719 – 721). Newark, Delaware, IRA & Erlbaum.

- Seidlhofer, B. (Ed.). (2003). Controversies in applied linguistics. New York: Oxford University Press.

- Schnotz, W. (2002). Towards an integrated view of learning from text and visual displays. Educational Psychology Review, Vol. 14, No 1, 101-120.

- Schnotz, W. (2005). An integrated model of text and picture comprehension. In Mayer, R. (Ed.), The Cambridge handbook of multimedia learning. New York: CUP.

- Turkle, S. (1984). The second self: computers and the human spirit. New York: Simon and Schuster.

- Turkle, S. (1995). Life on the screen: identity in the age of Internet. New York: Simon and Schuster.

- Turkle, S. (in press). Always-on/always-on-you: The tethered self. In Katz, James (Ed.), Handbook of mobile communications and social change. Cambridge, MA: MIT Press.

- Vandendorpe, C. (1999). Del papiro al hipertexto. Buenos Aires: Fondo de Cultura Económica.

- Walsh, M. (2007). Reading visual and multimodal texts: how is 'reading' different? Australian Journal of Language and Literacy, Vol. 29, No 1: 24-37. Retrieved January 14, 2007, from http://www.literacyeducators.com.au/docs/Reading%20multimodal%20texts.pdf

- Warschauer, M. (2002). Technology and school reform: A view from both sides of the tracks. English Language and Technology, Vol. 8, No 4.

Notes

- This article was made possible by support from DICYT USACH, Project 03065FF.

License

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

NonCommercial — You may not use the material for commercial purposes.

NoDerivatives — If you remix, transform, or build upon the material, you may not distribute the modified material.

The journal allows the author(s) to hold the copyright without restrictions. The Colombian Applied Linguistics Journal also allows the author(s) to retain publishing rights without restrictions.